AKA Deploying a fully working NerdDinner website in 15 minutes and 50 lines of code

The goal of this post is to show, one straightforward step at a time, how you can integrate PowerUp into your solution.

We will start by downloading the “NerdDinner” MVC sample, and finish by having two completely independent and fully working versions of the site on your local machine.

Don’t worry if this looks long – it is just because I haven’t left out a single detail. Also, the thing about PowerUp is that it is very layered – the deployment we are working on will just become gradually more sophisticated. You can actually stop at any step and you will have still produced something useful.

Here are some links to other parts of the page so you know where you are up to:

- Step Zero – Prerequisites

- Step One – Download NerdDinner

- Step Two – Download and copy PowerUp

- Step Three – Create your nant build file

- Step Four – Run your first build

- Step Five – Alter the build to include the compiled output

- Step Six – Start by deploying the files

- Step Seven – Create a website

- Step Eight – Introducing deployment settings

- Step Nine – Using the settings within deploy.ps1

- Step Ten – Deploying to another environment

- Step Eleven – Introducing file templating (usually for configs)

- Step Twelve – Create a template for connectionstrings.config

- Step Thirteen – Create the new setting for database.folder

- Step Fourteen – Witness connectionstrings.config being substituted during deployment

- Step Fifteen – Change main.build and deploy.ps1 to deploy the database files

- Step Sixteen – Why you would want to execute parts of the deployment on other machines

- Step Seventeen – Run web-deploy remotely

- Summary

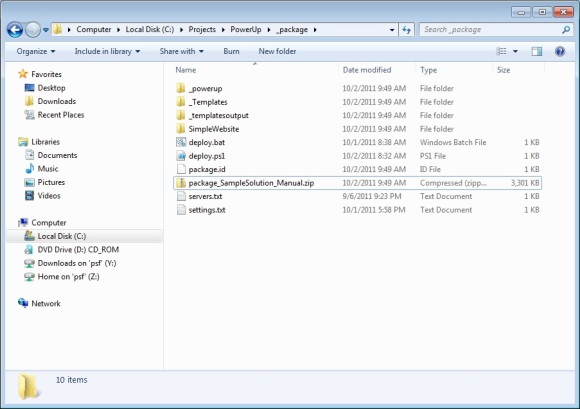

You can get everything going just from the steps below. If you prefer to have a fully working copy to look at, download the final solution as a zip file here (which is from the GitHub repo here).

Step Zero – Prerequisites

Remember to check you have the required prerequisites. Even better, make sure the QuickStart build runs fine. If it doesn’t, it’s unlikely that this step-by-step guide will work.

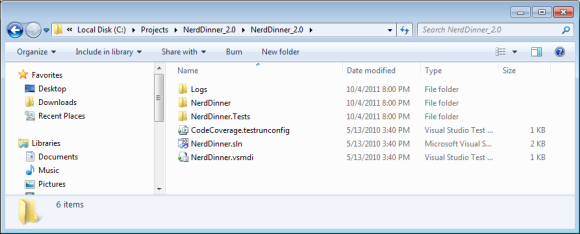

Step One – Download NerdDinner

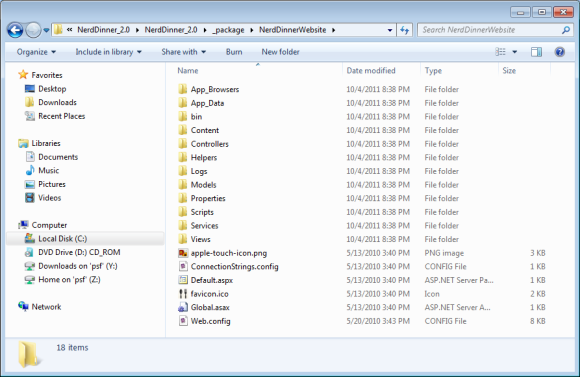

Download the NerdDinner Codeplex Zip File and extract this anywhere on your machine. It should have the following contents.

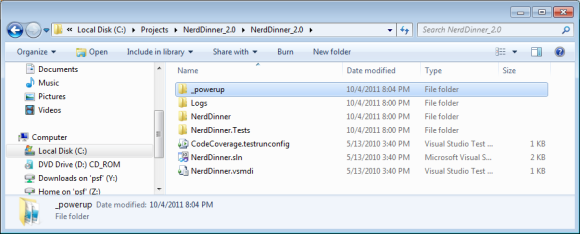

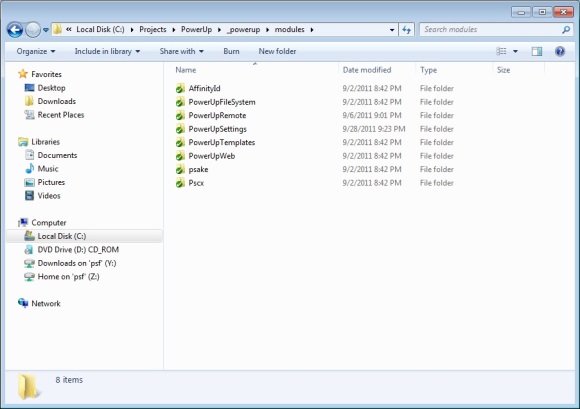

Step Two – Download and copy PowerUp

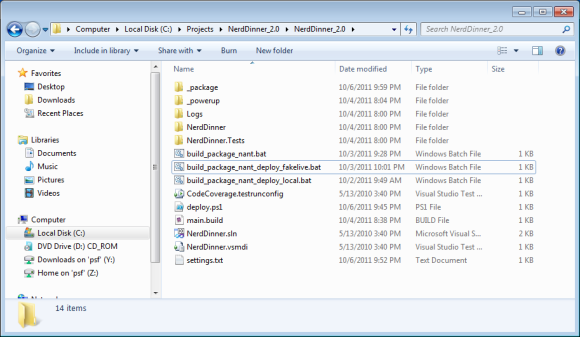

Download PowerUp and place the contents of the contained _powerup folder into the root folder of NerdDinner. The end result will look like this:

Step Three – Create your nant build file

At the root of NerdDinner, create a new file called main.build. Within this file, add the following code:

<project default="build-package-common">

<include buildfile="_powerup\common.build" />

<property name="solution.name" value="NerdDinner" />

<target name="package-project">

</target>

</project>

In short, this includes a reference to the common build file, tells PowerUp the name of the solution file, and creates a stub package-project target (empty for now).

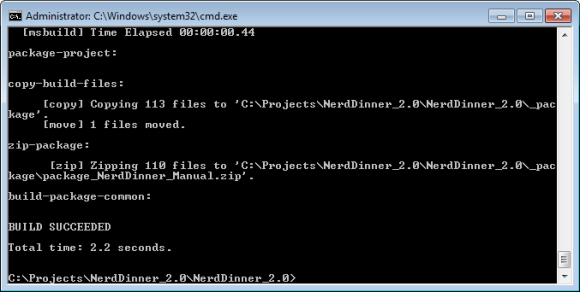

Step Four – Run your first build

Open a command prompt at the root of NerdDinner. Within this prompt, run the following:

_powerup\nant\bin\nant

This will run nant on your main.build file. The output will look like:

To make this easy to run again, create a batch file (build_package_nant.bat) with the following code:

_powerup\nant\bin\nant

pause

Step Five – Alter the build to include the compiled output

As the build currently stands, PowerUp is happily compiling the NerdDinner solution, and creating a basic package folder.

You might notice that the output of the NerdDinner solution isn’t in there – we need to actually tell PowerUp what to include for this to happen.

To make this happen, alter main.build to include these lines:

<project default="build-package-common">

<include buildfile="_powerup\common.build" />

<property name="solution.name" value="NerdDinner" />

<target name="package-project">

<copy todir="${package.dir}\NerdDinnerWebsite" overwrite="true" flatten="false" includeemptydirs="true">

<fileset basedir="${solution.dir}\NerdDinner">

<include name="**"/>

<exclude name="**\*.cs"/>

<exclude name="**\*.csproj"/>

<exclude name="**\*.user"/>

<exclude name="obj\**"/>

<exclude name="lib\**"/>

</fileset>

</copy>

</target>

</project>

What we have added is a basic copy target to copy all the necessary files from NerdDinner into the package.

Run the build again, and you should see NerdDinnerWebsite appear inside the package folder.

With that folder having these contents:

Step Six – Start by deploying the files

You might recall that PowerUp is roughly split into two steps – building a package, and deploying that package. Up until now, we have been working on the packaging. It’s time to start deploying.

To get this under way, create a file called deploy.ps1 in the root of the folder. Start with the contents:

include .\_powerup\commontasks.ps1

task deploy {

import-module powerupfilesystem

$packageFolder = get-location

copy-mirroreddirectory $packageFolder\nerddinnerwebsite c:\sites\nerddinner

}

This (psake) file first dot-includes the common tasks (to hook up with some useful defaults), then copies the contents of the NerdDinnerWebsite folder to c:\sites\nerddinner.

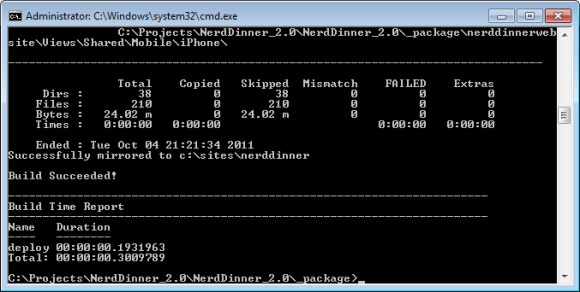

To execute this, open a command prompt in the _package directory and run:

deploy local

This will produce the following output:

And if you look in c:\sites\nerddinner, you will now see the contents of the NerdDinnerWebsite folder from the package.

To make later deployments easier, create a new file in the root called build_package_nant_deploy_local.bat with the contents:

_powerup\nant\bin\nant

cd _package

call deploy local

cd ..

pause

Step Seven – Create a website

During this step we will be altering the deployment script to automatically create a website pointing to the website folder. Alter deploy.ps1 to have the following contents:

include .\_powerup\commontasks.ps1

task deploy {

import-module powerupfilesystem

import-module powerupweb

$packageFolder = get-location

copy-mirroreddirectory $packageFolder\nerddinnerwebsite c:\sites\nerddinner

set-webapppool "NerdDinner" "Integrated" "v4.0"

set-website "NerdDinner" "NerdDinner" "c:\sites\nerddinner" "" "http" "*" 20001

}

Notice the extra lines at the bottom where we create an app pool, and also create a website.

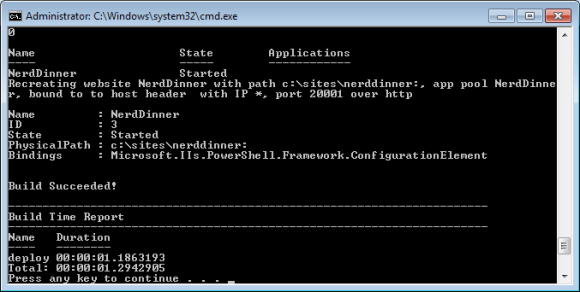

Rerun the build and deployment (build_package_nant_deploy_local.bat)

That’s the output of a successful website deployment!

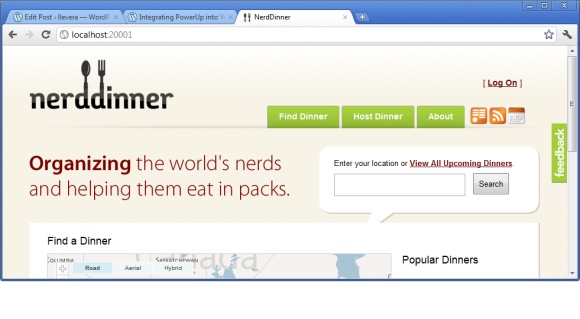

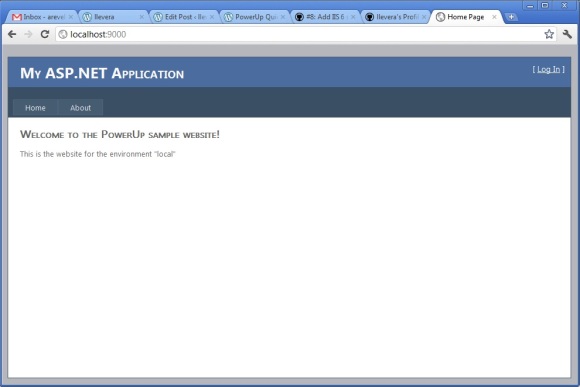

Now browse to http://localhost:20001 to see the NerdDinner website in action.

Step Eight – Introducing deployment settings

At first look, you might think we have finished the job. We have a fully working website, after all. The problem is this package is only going to work on one environment. Take a look again at this snippet from deploy.ps1:

copy-mirroreddirectory $packageFolder\nerddinnerwebsite c:\sites\nerddinner

set-webapppool "NerdDinner" "Integrated" "v4.0"

set-website "NerdDinner" "NerdDinner" "c:\sites\nerddinner" "" "http" "*" 20001

This screams “hard coded to hell”. It’s all very well deploying to c:\sites on your local machine. But what about staging or live? They might have the web folder in e:\webroot. Also, if you want to have two branches of this code base on the test server, how will you ensure they each have their own website? That’s where deployment profiles and settings come in.

Our first step in this direction is to introduce a settings file. Create the file settings.txt in the root, with the contents:

local

website.name NerdDinner

web.root c:\sites

http.port 20001

Step Nine – Using the settings within deploy.ps1

Now that you have settings, you can use them within your deploy.ps1. How? Just refer to them (by key) like you would any PowerShell variable. So in this case, change your deploy.ps1 file to:

include .\_powerup\commontasks.ps1

task deploy {

import-module powerupfilesystem

import-module powerupweb

$packageFolder = get-location

copy-mirroreddirectory $packageFolder\nerddinnerwebsite ${web.root}\${website.name}

set-webapppool ${website.name} "Integrated" "v4.0"

set-website ${website.name} ${website.name} ${web.root}\${website.name} "" "http" "*" ${http.port}

}

Notice how this is almost the same script as from step seven. All that has changed is that we have replaced the hard coded file paths and website parameters with the setting names.

Run build_package_nant_deploy_local.bat and notice the website deploys again and still runs exactly as before.

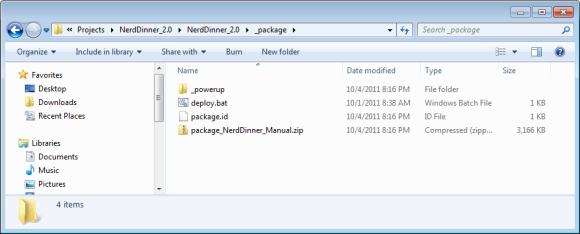

Step Ten – Deploying to another environment

Now we have settings and a parameterised build file, we can easily deploy to other environments by simply adding additional deployment profiles. Normally this would target another server (test, staging, live etc), but in this case I’m going to assume you don’t have many other machines lying around so we are going to have to “fake it” by deploying to another location on your machine.

Start by changing the settings.txt file to:

default

web.root c:\sites

local

website.name NerdDinner

http.port 20001

fakelive

website.name NerdDinnerFakeLive

http.port 20002

This has created the new deployment profile, “fakelive”. Also notice the “default profile” – this is a special, reserved, profile that all others inherit from (so common settings don’t have to be repeated).
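To make the inheritance rule concrete, here is a rough Python sketch of how a named profile could be layered over “default”. This is only an illustration of the idea, not PowerUp’s actual code: the dictionary layout and the resolve_profile name are my own assumptions.

```python
# Illustrative only: models the "default" profile inheritance described above.
# The dictionary layout and function name are assumptions, not PowerUp internals.

def resolve_profile(profiles, name):
    """Return the settings for `name`, layered on top of the 'default' profile."""
    if name not in profiles:
        raise KeyError(f"unknown deployment profile: {name}")
    merged = dict(profiles.get("default", {}))  # start from the shared settings
    merged.update(profiles[name])               # profile-specific values win
    return merged


profiles = {
    "default": {"web.root": r"c:\sites"},
    "local": {"website.name": "NerdDinner", "http.port": "20001"},
    "fakelive": {"website.name": "NerdDinnerFakeLive", "http.port": "20002"},
}

print(resolve_profile(profiles, "fakelive"))
```

Both profiles end up with web.root from “default”, while each keeps its own website.name and http.port.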

Now to deploy this new profile, all you need to do is rebuild the package and deploy to “fakelive”. The following batch file will do this:

_powerup\nant\bin\nant

cd _package

call deploy fakelive

cd ..

pause

This is identical to the previous deployments except we have changed the argument to “deploy” from “local” to “fakelive”.

Save this to build_package_nant_deploy_fakelive.bat in the root and run it. You will now have a second copy of the site, serving from http://localhost:20002.

This demonstrates the core tenet of PowerUp – the ability to deploy to any number of environments from a single package.

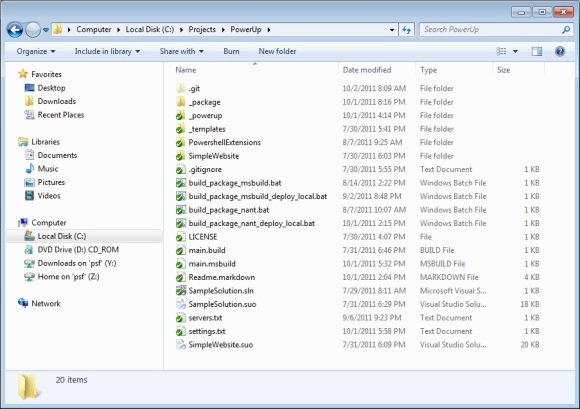

As a double check, your solution folder should now look like this:

Step Eleven – Introducing file templating (usually for configs)

Hopefully the above shows the value of being able to use settings with your build script. There is another very common case where deployments need to vary by environment. That is the difference in the contents of the files you are deploying. This is almost always config files, such as web.config or app.config.

There are various common existing techniques to deal with this issue, ranging from having multiple config files (web.config.staging, web.config.live) and swapping them around at deploy time, to using Visual Studio Config Transformations. PowerUp (by default) chooses a different technique – that of file templating, and using the same settings file used by deploy.ps1 to substitute in the correct values at deploy time. We believe this centralization of settings holds massive advantages.

I’m going to show this in action by templating NerdDinner’s connectionstrings.config.

If you look at this file (I won’t paste it here, as it’s quite long), you will see 3 connection strings. At the moment they all bind to |DataDirectory|, which looks in App_Data for the database files (NerdDinner.mdf etc).

Let’s say, for the sake of argument, we want these files to exist somewhere else on disk, and we want this location to be different on each environment.

To achieve this, we are going to template the database file folder in connectionstrings.config and create a deployment profile setting to make this different for local and fakelive.

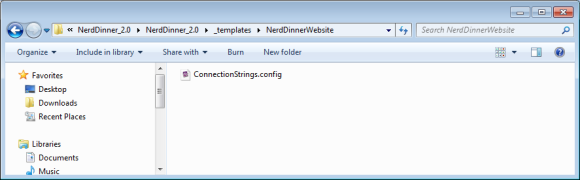

Step Twelve – Create a template for connectionstrings.config

Starting at the root folder:

- Create a new folder called _templates.

- Within that folder, create another folder called NerdDinnerWebsite (matching the folder name in the package)

- Copy into that folder the connectionstrings.config file from NerdDinner website

Open up connectionstrings.config and replace |DataDirectory| with “${database.folder}\”. Doing this introduces the placeholders to be replaced during deployment. For example, the first setting should read:

<add name="ApplicationServices" connectionString="data source=.\SQLEXPRESS;Integrated Security=SSPI;AttachDBFilename=${database.folder}\aspnetdb.mdf;User Instance=true" providerName="System.Data.SqlClient"/>

Step Thirteen – Create the new setting for database.folder

Change settings.txt to now include the setting database.folder, ie:

default

web.root c:\sites

database.folder c:\databases\${website.name}

local

website.name NerdDinner

http.port 20001

fakelive

website.name NerdDinnerFakeLive

http.port 20002

Also notice how settings can reference each other (c:\databases\${website.name}), a useful trick to keep the settings file compact.
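If it helps to picture how a setting that refers to another setting gets its final value, here is a small Python sketch. It is purely illustrative and assumes a simple ${key} lookup with a guard against circular references; the expand function is hypothetical and says nothing about how PowerUp implements this internally.

```python
import re

# Matches ${some.key} placeholders, the syntax used in settings.txt above.
PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def expand(settings, key, _seen=()):
    """Return settings[key] with any ${other.key} references filled in.

    Hypothetical helper for illustration; raises ValueError on circular
    references and KeyError on unknown keys.
    """
    if key in _seen:
        raise ValueError(f"circular reference involving {key}")

    def replace(match):
        # Resolve the referenced setting, remembering the path taken so far.
        return expand(settings, match.group(1), _seen + (key,))

    return PLACEHOLDER.sub(replace, settings[key])


settings = {
    "website.name": "NerdDinner",
    "database.folder": r"c:\databases\${website.name}",
}

print(expand(settings, "database.folder"))
```

With website.name set to NerdDinnerFakeLive instead, the same database.folder entry would expand to a different folder, which is exactly why it can live in “default”.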

Step Fourteen – Witness connectionstrings.config being substituted during deployment

Run build_package_nant_deploy_local.bat to deploy another package.

Open C:\sites\NerdDinner\connectionstrings.config – you should see that the placeholder for ${database.folder} has been replaced with “c:\databases\NerdDinner”.

Now run build_package_nant_deploy_fakelive.bat, and open C:\sites\NerdDinnerFakeLive\connectionstrings.config. In this deployment, the value is “c:\databases\NerdDinnerFakeLive” instead.

This is config substitution in action, and hopefully shows how any part of the config file can be templated, handling all the common cases of connection strings, webservice urls etc.
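For the curious, the general technique can be sketched in a few lines of Python. To be clear, this is my own simplified model and not PowerUp’s code: it assumes every file under a templates folder is rendered into the same relative path under a target folder, and the render and apply_templates names are made up for the example.

```python
import re
import tempfile
from pathlib import Path

PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def render(text, settings):
    """Replace every ${key} in text with its value from settings."""
    return PLACEHOLDER.sub(lambda m: settings[m.group(1)], text)


def apply_templates(template_root, target_root, settings):
    """Render each template file into the same relative path under target_root."""
    template_root = Path(template_root)
    for template in template_root.rglob("*"):
        if template.is_file():
            target = Path(target_root) / template.relative_to(template_root)
            target.parent.mkdir(parents=True, exist_ok=True)
            target.write_text(render(template.read_text(), settings))


# Demo in a throwaway directory; the folder names mirror the ones in this post.
with tempfile.TemporaryDirectory() as tmp:
    templates = Path(tmp) / "_templates"
    package = Path(tmp) / "_package"
    config = templates / "NerdDinnerWebsite" / "connectionstrings.config"
    config.parent.mkdir(parents=True)
    config.write_text(r"AttachDBFilename=${database.folder}\aspnetdb.mdf")

    apply_templates(templates, package, {"database.folder": r"c:\databases\NerdDinner"})
    print((package / "NerdDinnerWebsite" / "connectionstrings.config").read_text())
```

The point of the sketch is simply that the template and the settings are combined per deployment profile, so one template can produce a different config file for each environment.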

Step Fifteen – Change main.build and deploy.ps1 to deploy the database files

To get the NerdDinner database files into these directories, we are going to need to firstly change the packaging to copy them from their current location in App_Data, and then change the deployment to copy them to their folders under c:\databases.

So first, change main.build to package the databases from app_data, ie:

<project default="build-package-common">

<include buildfile="_powerup\common.build" />

<property name="solution.name" value="NerdDinner" />

<target name="package-project">

<copy todir="${package.dir}\NerdDinnerDatabases" overwrite="true" flatten="false" includeemptydirs="true">

<fileset basedir="${solution.dir}\NerdDinner\app_data">

<include name="**"/>

</fileset>

</copy>

<copy todir="${package.dir}\NerdDinnerWebsite" overwrite="true" flatten="false" includeemptydirs="true">

<fileset basedir="${solution.dir}\NerdDinner">

<include name="**"/>

<exclude name="**\*.cs"/>

<exclude name="**\*.csproj"/>

<exclude name="**\*.user"/>

<exclude name="obj\**"/>

<exclude name="lib\**"/>

</fileset>

</copy>

</target>

</project>

Then change deploy.ps1 to read:

include .\_powerup\commontasks.ps1

task deploy {

import-module powerupfilesystem

import-module powerupweb

$packageFolder = get-location

copy-mirroreddirectory $packageFolder\nerddinnerwebsite ${web.root}\${website.name}

if (!(Test-Path ${database.folder}))

{

copy-mirroreddirectory $packageFolder\NerdDinnerDatabases ${database.folder}

}

set-webapppool ${website.name} "Integrated" "v4.0"

set-website ${website.name} ${website.name} ${web.root}\${website.name} "" "http" "*" ${http.port}

}

The relevant addition is to the copying of the NerdDinnerDatabases folder from the package to the database directory (if it doesn’t already exist, to prevent overwriting).
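The “only copy if the folder is not there yet” guard is worth a closer look, because it is what stops a redeployment from wiping live data. Here is the same idea as a small Python sketch (the copy_if_missing helper is hypothetical and only mirrors the Test-Path check above):

```python
import shutil
import tempfile
from pathlib import Path


def copy_if_missing(source, destination):
    """Copy source to destination only when destination does not exist yet.

    Returns True if a copy happened, False if the destination was left alone.
    """
    destination = Path(destination)
    if destination.exists():
        return False
    shutil.copytree(source, destination)
    return True


# Demo in a throwaway directory: the second "deployment" must not overwrite data.
with tempfile.TemporaryDirectory() as tmp:
    packaged = Path(tmp) / "NerdDinnerDatabases"
    packaged.mkdir()
    (packaged / "NerdDinner.mdf").write_text("seed data")
    deployed = Path(tmp) / "databases" / "NerdDinner"

    print(copy_if_missing(packaged, deployed))   # first deployment copies the files
    (deployed / "NerdDinner.mdf").write_text("live data")
    print(copy_if_missing(packaged, deployed))   # later deployments leave them alone
```

The trade-off is that changes to the packaged database files never reach an environment that already has a database folder, which is usually what you want for data but worth knowing about.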

Running build_package_nant_deploy_local.bat and build_package_nant_deploy_fakelive.bat should now distribute those database files to c:\databases\NerdDinner and c:\databases\NerdDinnerFakeLive respectively.

Browse to http://localhost:20001 and http://localhost:20002 to see the sites still ticking along.

If you perform a few functions (register an account etc), you will see they are running off independent databases.

Step Sixteen – Why you would want to execute parts of the deployment on other machines

Again, you might be excused for thinking we are done. The deployment and config files are now good for any number of environments.

But have a quick think. What would happen if you wanted to a) run this script from a Continuous Integration server deploying to a test server or b) deploy files or create websites on more than one server (for example a load balanced environment)?

At the moment, our deploy.ps1 has the severe restriction that it all runs on the same machine. In the CI case, this means that if this script was run, it would create the websites on the CI server (or agent). Hardly ideal!

But don’t worry, the solution is quite simple.

Step Seventeen – Run web-deploy remotely

Start by altering deploy.ps1 as follows:

include .\_powerup\commontasks.ps1

task deploy {

run web-deploy ${web.servers}

}

task web-deploy {

import-module powerupfilesystem

import-module powerupweb

$packageFolder = get-location

copy-mirroreddirectory $packageFolder\nerddinnerwebsite ${web.root}\${website.name}

if (!(Test-Path ${database.folder}))

{

copy-mirroreddirectory $packageFolder\NerdDinnerDatabases ${database.folder}

}

set-webapppool ${website.name} "Integrated" "v4.0"

set-website ${website.name} ${website.name} ${web.root}\${website.name} "" "http" "*" ${http.port}

}

The change is fairly subtle: We have moved most of the script from the default “deploy” task to “web-deploy”, and changed “deploy” to call web-deploy.

The key here is the “run” command. This instructs PowerUp to run the web-deploy remotely on another machine.

To get this going, we need to add a few more things. First of all, we change settings.txt to read:

default

web.root c:\sites

database.folder c:\databases\${website.name}

local

web.servers localmachine

website.name NerdDinner

http.port 20001

fakelive

web.servers localmachine

website.name NerdDinnerFakeLive

http.port 20002

The important change here is declaring that (for both deployment profiles) the web server is the server “localmachine”.

Next we need a new file, called servers.txt, to describe some necessary details about “localmachine”.

So, create servers.txt in the root, with the contents:

localmachine

server.name localhost

remote.temp.working.folder \\${server.name}\c$\packages

local.temp.working.folder c:\packages

Note that, unlike settings.txt (which has no rules about which keys you create), servers.txt must have all three settings, with those exact names, for each server. These settings simply let PowerUp know the name of the server, the UNC path it can copy your package to, and the local folder on that server from which the deployment scripts are run.
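If you end up with a lot of servers, it can be handy to sanity check the file before deploying. The Python sketch below shows the kind of check I mean; it assumes the servers have already been read into a dictionary (the parsing is left out) and the missing_server_keys helper is my own invention, not something PowerUp provides.

```python
# The three keys this post says every entry in servers.txt must define.
REQUIRED_KEYS = {
    "server.name",
    "remote.temp.working.folder",
    "local.temp.working.folder",
}


def missing_server_keys(servers):
    """Map each server name to the sorted list of required keys it lacks."""
    problems = {}
    for name, settings in servers.items():
        missing = sorted(REQUIRED_KEYS - set(settings))
        if missing:
            problems[name] = missing
    return problems


servers = {
    "localmachine": {
        "server.name": "localhost",
        "remote.temp.working.folder": r"\\${server.name}\c$\packages",
        "local.temp.working.folder": r"c:\packages",
    },
}

print(missing_server_keys(servers))  # an empty dict means every server is complete
```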

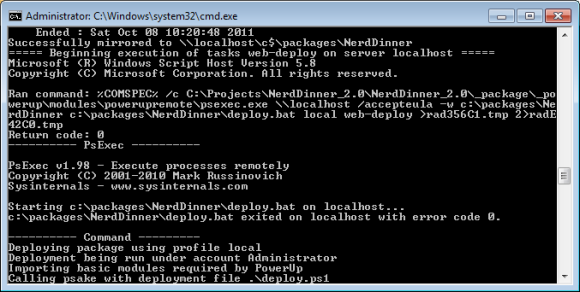

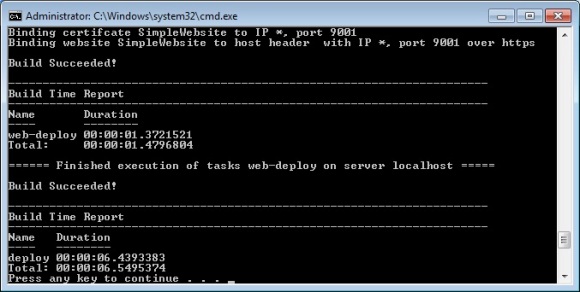

Now that is all in place, run build_package_nant_deploy_local.bat again.

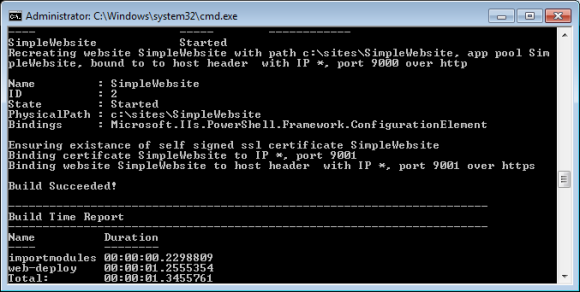

Notice how the output now changes:

At the key moment (the remote call to web-deploy), PowerUp automatically copies your package to the remote working folder, then starts the deployment again for the task web-deploy.

This can be done at any point in the script, to any server. You can even call out remotely when you are already remote (there are a lot more details to this that I will cover in later posts.)

Summary

Well there we have it. Hopefully that took way longer for me to write than it took for you to run through!

If you reflect on what has been done to make this all work, it might be quite startling how little it took. All we did to get this website buildable and deployable to multiple environments was to:

- Copy the _powerup folder

- Create main.build (23 lines)

- Create settings.txt (13 lines)

- Create deploy.ps1 (21 lines)

- Create servers.txt (4 lines)

- Template connectionstrings.config (won’t count this)

- Create a few batch files (won’t count this either)